Meet Native Texture Synthesis

Generate coherent, seamless materials and textures directly in 3D space.

Latent Color Diffusion

Consistent, seamless textures across complex geometry.

Seamless Texture Generation as

Latent Color Diffusion

Zeqiang Lai1,2★, Yunfei Zhao2★,

Zibo Zhao2, Xin Yang2

Xin Huang2, Jingwei Huang2,

Xiangyu Yue1†, Chunchao Guo2†

1MMLab, CUHK · 2Tencent Hunyuan

★ Equal contribution † Corresponding authors

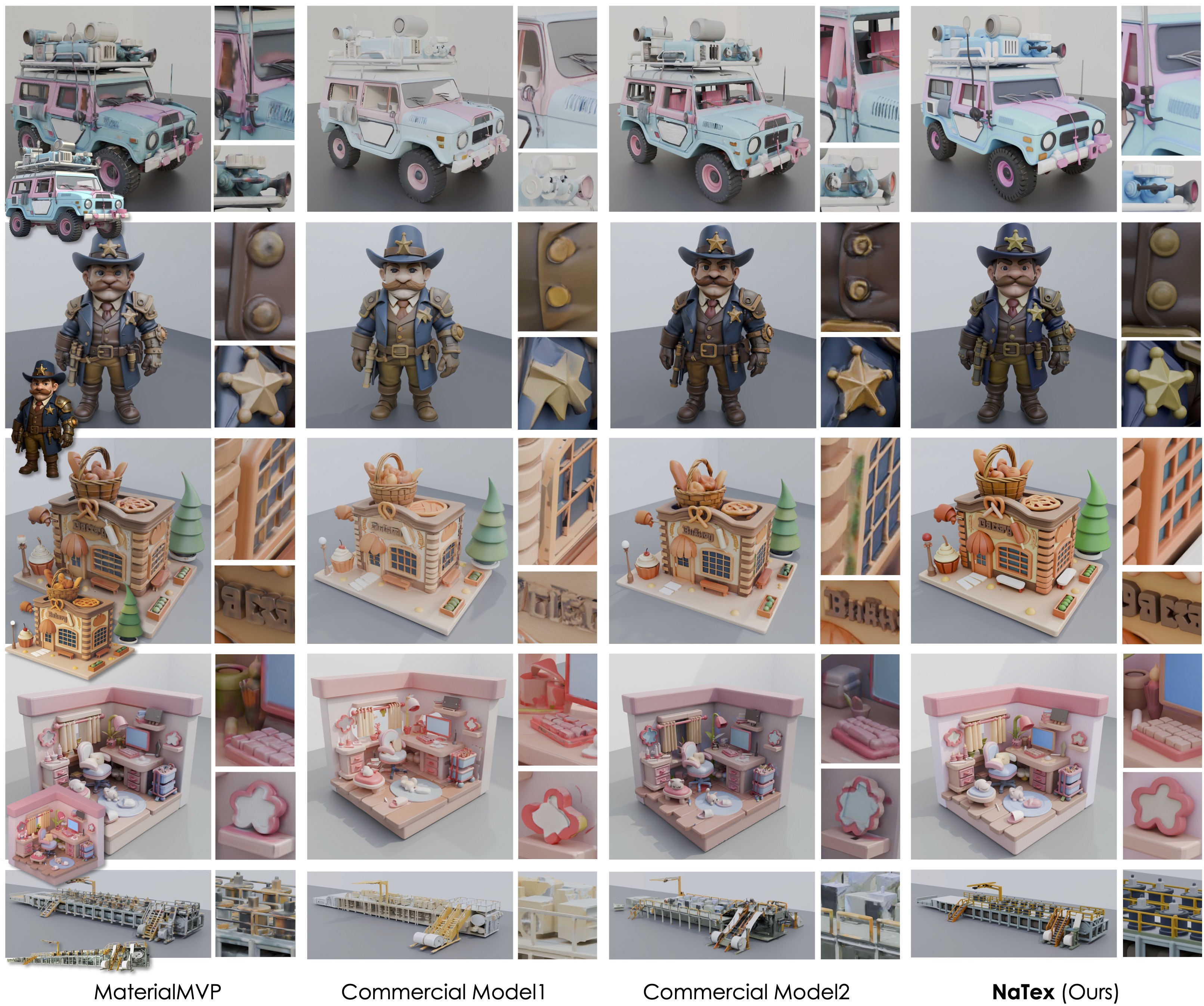

Comparing NaTex with previous Multi-View Diffusion (MVD) methods on core challenges

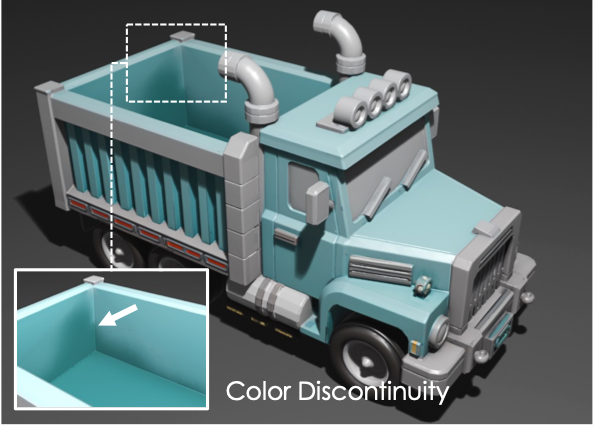

Challenge: Occlusion regions are an inevitable aspect of multi-view texturing; no matter the approach, they cannot be entirely avoided.

Inconsistent texture in occluded regions (white arrows). Visible discontinuities between overlapping surfaces.

Seamless texture continuity across occluded regions. Consistent color and pattern throughout the entire surface.

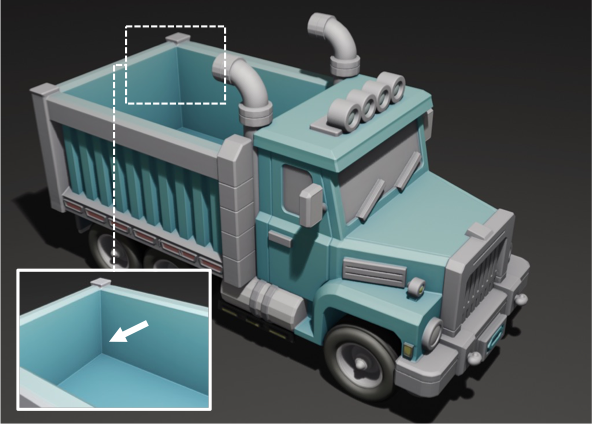

Challenge: MVDs have difficulties in achieving precise alignment of texture features with fine-grained geometric details.

Pattern misalignment and color discontinuities at object boundaries.

Native texture generation ensures seamless alignment. No notable seams between object boundaries.

Challenge: Maintaining consistency across multiple views is a costly process, and even state-of-the-art video models struggle to achieve satisfactory results in this regard.

Fragmented texture patterns. Inconsistent color across the model surface.

Global texture coherence. Uniform and consistent color across the entire model.

All results are generated with the same input image and geometry. Images are cropped to highlight key differences.

NaTex directly predicts RGB color for given 3D coordinates via a latent diffusion approach, a paradigm that has shown remarkable effectiveness in image, video, and 3D shape generation, yet unexplored for texture generation.

To mitigate the computational challenges of performing diffusion directly on a dense point cloud, we propose a color point cloud Variational Autoencoder (VAE) with a similar architecture to 3DShape2VecSet. Unlike 3DShape2VecSet, which focuses on shape autoencoding, our model operates on color point clouds.

We design a dual-branch VAE that extends the color VAE with an additional geometry branch, encoding shape cues to guide color latent compression. In this way, geometry tokens are deeply intertwined with color tokens, enabling stronger geometric guidance during color generation at test time.

Together, these adaptations yield an efficient autoencoder that achieves over 80× compression, enabling efficient scaling for subsequent diffusion transformer (DiT) generation.

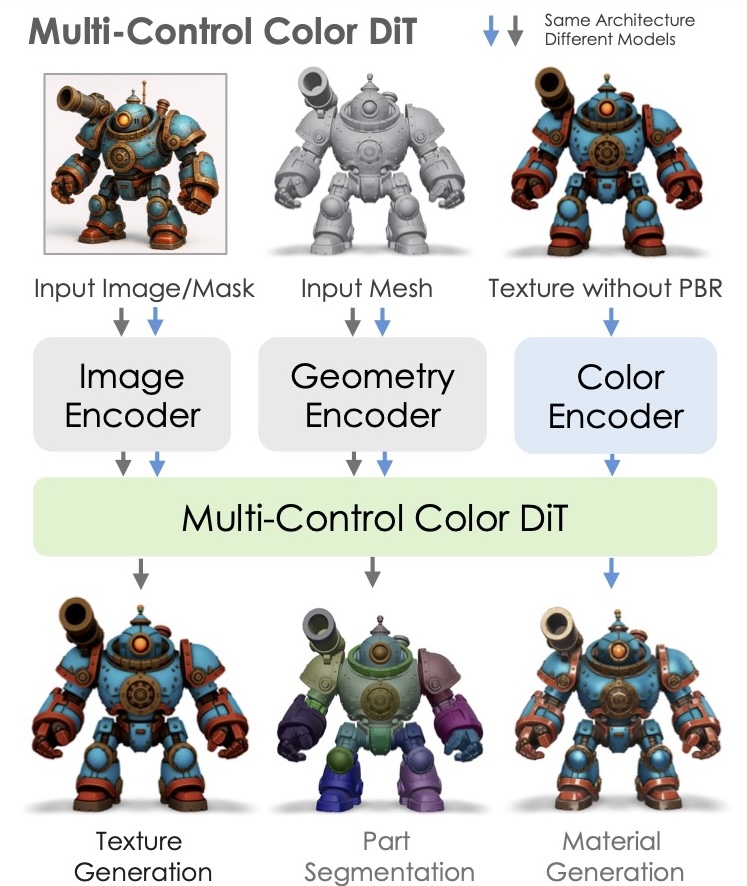

We introduce a multi-control color DiT that flexibly accommodates different control signals. We integrate precise surface hints into DiT via pairwise conditional geometry tokens, implemented through positional embeddings and channel-wise concatenation.

Our design enables a wide range of applications beyond image-conditioned texture generation (with geometry and image controls) to textureconditioned material generation and texture refinement (using an initial texture, named as color control). Notably, NaTex exhibits remarkable generalization capability, enabling image-conditioned part segmentation and texturing even in a training-free manner.

Key Advantage: Global context modeling for 3D-aware texture synthesis

Click on the tabs above to switch between VAE and DiT architecture details.

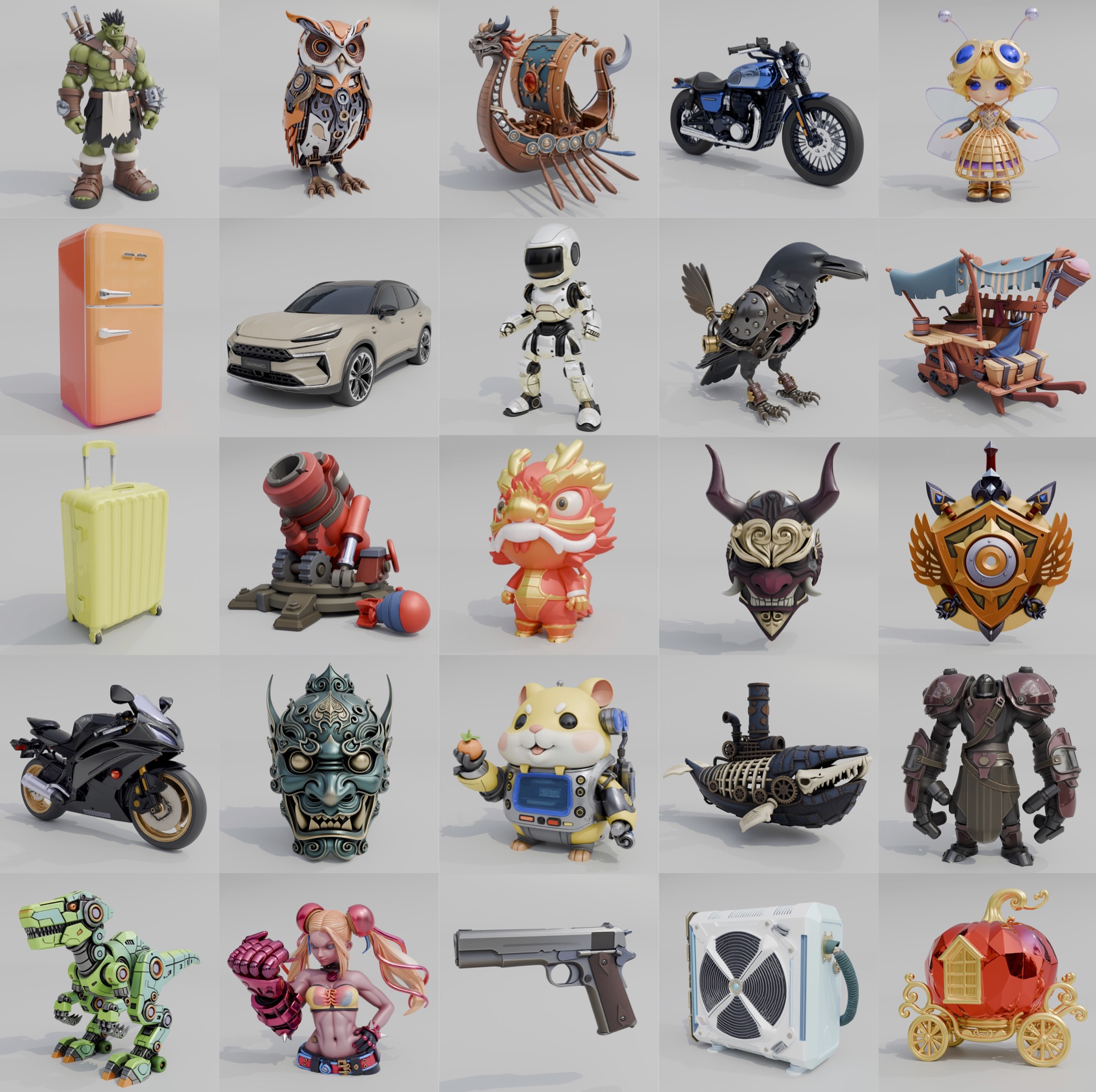

NaTex outperforms state-of-the-art methods in texture alignment and coherence and occlusion handling.

Commercial models use their own geometries, while other methods share the same geometry from Hunyuan3D 2.5. All methods are rendered with albedo only.

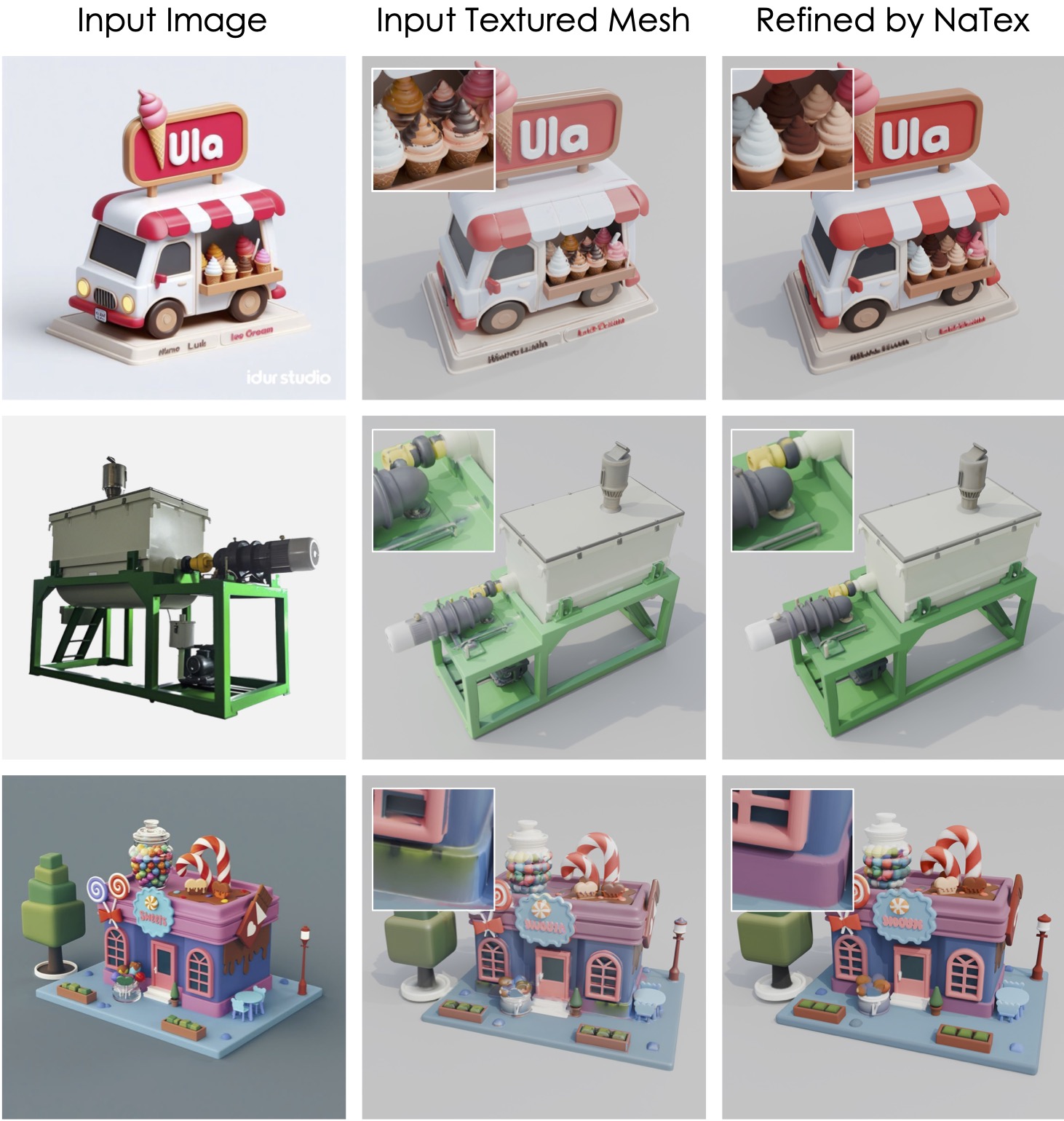

Broader Application of NaTex. Explore how our technology extends to various 3D content creation tasks.

Generate high-fidelity, physically plausible materials with realistic textures and properties. NaTex's latent color diffusion framework could be readily extended for capturing material characteristics like roughness and metallic.

Our model with color control can be viewed as a neural refiner that automatically inpaints occluded regions and corrects texture. M

Moreover, thanks to the strong conditioning, it can performs the process in just five steps, making it extremely fast and efficient for a wide range of downstream tasks that require intelligent refinement.

Our model can be easily adapted for part segmentation tasks. This can be achieved by feeding 2D segmentation of the input image into the model, allowing it to generate texture map that align with 3D part segmentation results.

Within our native texture framework, part generation is as straightforward as generating textures for the entire object, as we can predict color directly in 3D space for different part surfaces.